Hello colleagues,

We hope this message finds you well through these accelerating times!

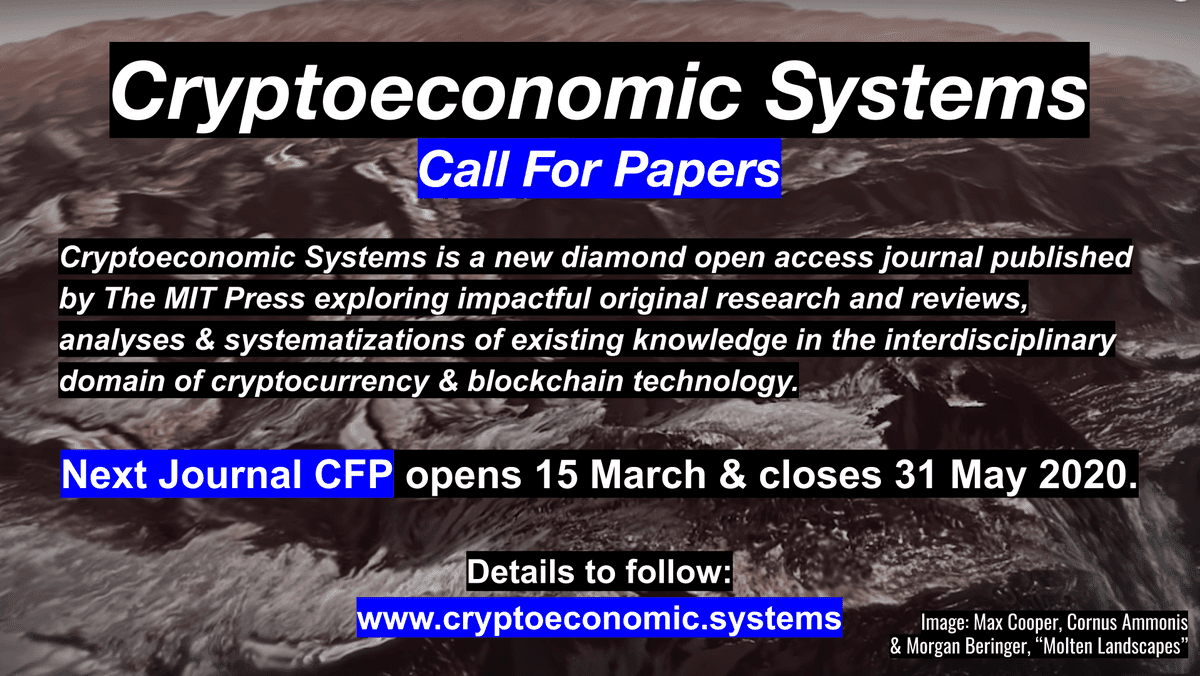

This is a time-critical update: our first journal Call for Papers is due to close at the end of this month! Please consider it as a venue for your work and help us push forward this interdisciplinary experiment by sharing the information with your colleagues:

Our submission portal is here: https://jcryptoeconsys20.hotcrp.com/

Topics of Interest:

Topics of interest include all contexts of cryptoeconomic systems with emphasis on computer science, economics and law. Interdisciplinary approaches are particularly encouraged. Here are some topics that the editorial team wish to highlight:

Analysis of blockchain protocols

Anonymity, privacy, and confidentiality

Applications using or built on Bitcoin

Bitcoin protocol and extensions

Case studies (e.g. of adoption, attacks, forks, scams, …)

Cryptocurrency adoption and transition dynamics

Digital cash

Distributed consensus protocols

Economic and monetary aspects of cryptocurrencies

Economics and/or game theoretic analysis of cryptocurrency protocols

Economics of permissioned and permissionless blockchains

Empirical analysis of network and on-chain data

Forensics, monitoring, and fraud detection (performing and preventing)

Layer 2

Legal, ethical and societal aspects of digital currencies

Network resilience

New cryptographic primitives applied to cryptocurrencies or blockchains

Peer-to-peer networks

Philosophical scope and implications of digital money & virtual resources

Proof-of-work, -stake, -burn

Real-world measurements and metrics

Relation of cryptocurrencies to other payment systems

Scripting and smart contract analysis and tools

Transaction graph analysis

Usability and user studies

Verification

Other Cryptoeconomic Systems news brief:

We are seeking expressions of interest to host our flagship peer reviewed conference in Europe or Asia in 2021. Call for Hosts is here.

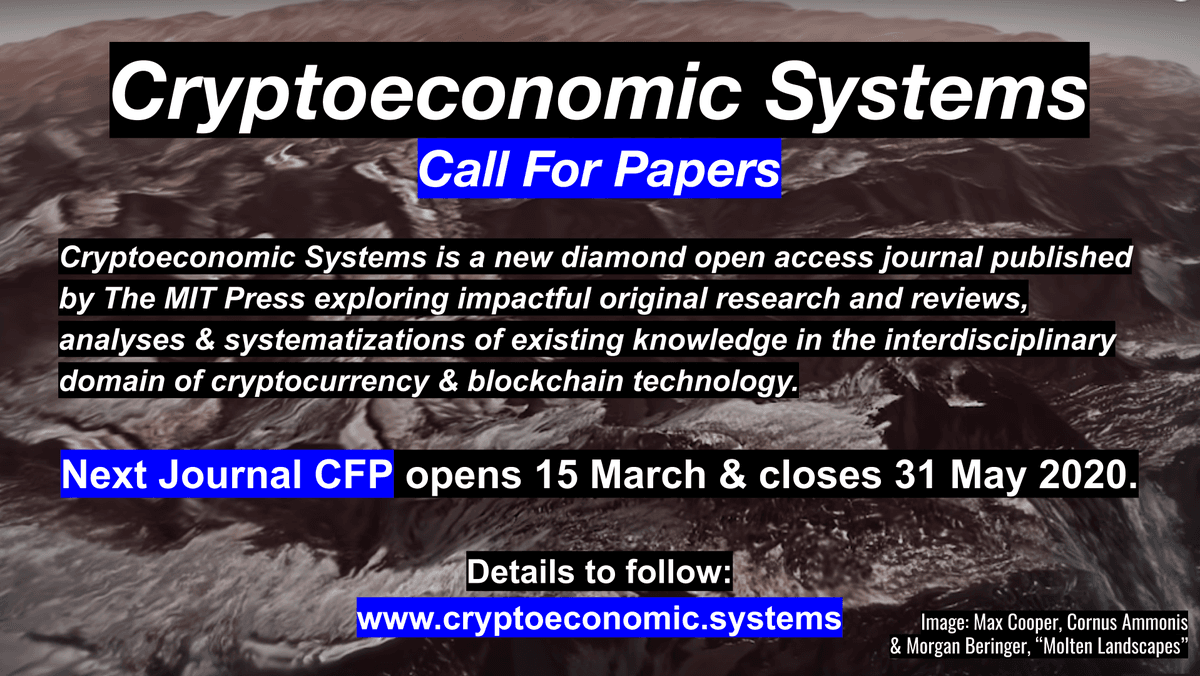

CES’20 presentation videos are on YouTube and full texts of papers are linked on the conference program.

Our editors Andrew, Neha and Wassim wrote this letter earlier in the year giving some background and context to the Cryptoeconomic Systems journey so far.

Our prototype of the first journal output will soon be ready for your perusal! Issue 0 is intended will be an open experiment in reformulating the traditional publication medium for our accelerating times.

Best wishes,

Wassim and the Cryptoeconomic Systems team

https://cryptoeconomic.systems